Goal

Deploy the model service with docker-compose and with a k8s YAML manifest.

Prepare the image

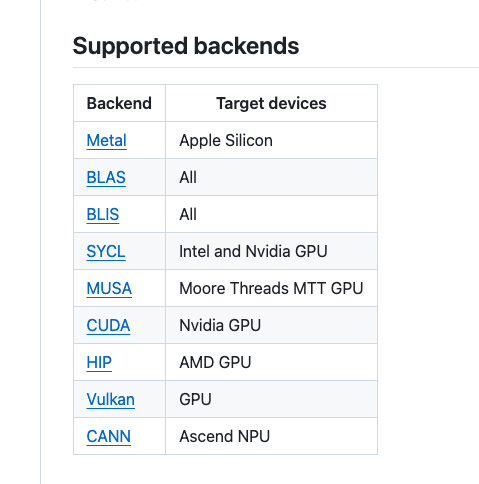

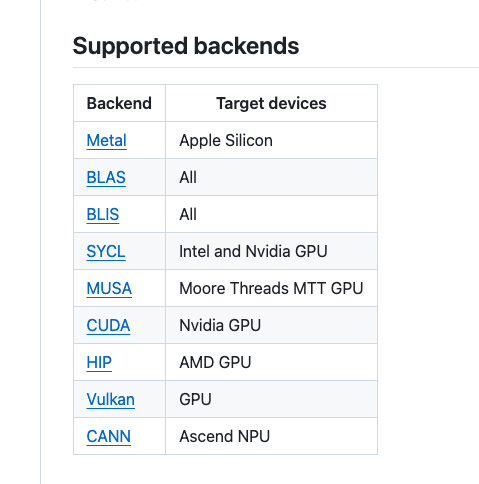

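This guide uses the llama.cpp base image as its reference. llama.cpp was chosen because it supports many kinds of accelerator cards and can also run large models on the CPU.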

Use an existing official image

If the host's CUDA version is compatible with the official image, you can use the official image directly.

Reference: https://github.com/ggerganov/llama.cpp/blob/master/docs/docker.md

With a GPU, use: ghcr.io/ggerganov/llama.cpp:server-cuda

Without a GPU, use: ghcr.io/ggerganov/llama.cpp:server
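The choice above can be automated. This is a minimal sketch. It assumes that a successful `nvidia-smi` run is a good enough signal that the host has an NVIDIA GPU and driver. `pick_llama_image` and the `HAS_GPU` override are hypothetical helpers and are not part of llama.cpp.

```shell
# Hypothetical helper: print the official llama.cpp server image to pull.
# HAS_GPU=1 or HAS_GPU=0 forces the choice; if unset, it is auto-detected.
pick_llama_image() {
  local has_gpu="${HAS_GPU:-}"
  if [ -z "$has_gpu" ]; then
    # Assumption: nvidia-smi running successfully means an NVIDIA GPU and driver are present.
    if command -v nvidia-smi >/dev/null 2>&1 && nvidia-smi >/dev/null 2>&1; then
      has_gpu=1
    else
      has_gpu=0
    fi
  fi
  if [ "$has_gpu" = 1 ]; then
    echo "ghcr.io/ggerganov/llama.cpp:server-cuda"
  else
    echo "ghcr.io/ggerganov/llama.cpp:server"
  fi
}
```

Typical use: `docker pull "$(pick_llama_image)"`.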

Before pulling the latest default image, check the latest Dockerfiles and confirm that the CUDA version they use is compatible with your environment: https://github.com/ggerganov/llama.cpp/tree/master/.devops
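The compatibility check can be sketched in shell. The sketch relies on a common rule of thumb: the CUDA version an image is built on should not exceed the "CUDA Version" shown in the `nvidia-smi` header, which is the newest CUDA version the host driver supports. It compares only major.minor, uses GNU `sort -V`, and ignores NVIDIA's finer minor-version and forward-compatibility cases. `cuda_image_ok` and `host_cuda_version` are hypothetical helpers.

```shell
# Hypothetical helper: succeed if the image's CUDA version (major.minor) is
# not newer than the maximum CUDA version supported by the host driver.
# $1: host driver's max CUDA version, e.g. "12.2"
# $2: CUDA version of the image, e.g. CUDA_VERSION from the Dockerfile ("11.8.0")
cuda_image_ok() {
  local host_max image_cuda
  host_max=$(printf '%s' "$1" | cut -d. -f1,2)
  image_cuda=$(printf '%s' "$2" | cut -d. -f1,2)
  # sort -V orders version strings field by field; the smaller one comes first.
  [ "$(printf '%s\n%s\n' "$host_max" "$image_cuda" | sort -V | head -n 1)" = "$image_cuda" ]
}

# Read the driver's max CUDA version from the nvidia-smi header (empty if unavailable).
host_cuda_version() {
  nvidia-smi 2>/dev/null | grep -o 'CUDA Version: [0-9.]*' | awk '{print $3}'
}
```

For example, `cuda_image_ok "$(host_cuda_version)" 11.8.0 && echo compatible`. If `nvidia-smi` is missing, the host version is empty and the check fails. That is the safer default.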

If the latest image does not meet your needs, search the historical images on this page: https://github.com/ggerganov/llama.cpp/pkgs/container/llama.cpp

Taking the latest image as an example, the commands are:

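```shell
# Add --platform only if the platform (OS/CPU architecture) of the machine pulling the image differs from the target machine.
docker pull --platform linux/amd64 ghcr.io/ggerganov/llama.cpp:server-cuda
# To import the image into an offline target machine, save it as a tar archive.
docker save -o llama.cpp.tar ghcr.io/ggerganov/llama.cpp:server-cuda
```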
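Before loading the archive on the offline machine, it can help to confirm that the archive contains the expected tag. This is a minimal sketch. It assumes the archive was written by `docker save`, which stores a top-level `manifest.json` listing each image's `RepoTags`. `archive_has_tag` is a hypothetical helper.

```shell
# Hypothetical helper: succeed if a `docker save` archive lists the given tag
# in its manifest.json. The file is read straight from the tar without unpacking.
archive_has_tag() {
  local tar_file="$1" tag="$2"
  # Match the tag inside JSON quotes so "...:server" does not match "...:server-cuda".
  tar -xOf "$tar_file" manifest.json 2>/dev/null | grep -qF "\"$tag\""
}
```

On the target machine, the archive could then be loaded with `archive_has_tag llama.cpp.tar ghcr.io/ggerganov/llama.cpp:server-cuda && docker load -i llama.cpp.tar`.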

Build a custom image

If none of the historical images fits your environment, build a custom image. CUDA 11.8.0 is used as the example here.

Browse the tags of the nvidia/cuda image and pick a suitable version: https://hub.docker.com/r/nvidia/cuda/tags
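The example Dockerfile later in this article builds its base image names as `nvidia/cuda:${CUDA_VERSION}-devel-ubuntu${UBUNTU_VERSION}` and `nvidia/cuda:${CUDA_VERSION}-runtime-ubuntu${UBUNTU_VERSION}`. Both tags must therefore exist for the pair you choose. The sketch below prints both tags and checks them with `docker manifest inspect`, which queries the registry without pulling. `cuda_base_images` and `check_cuda_base_images` are hypothetical helpers.

```shell
# Hypothetical helper: print the devel and runtime nvidia/cuda tags for a
# CUDA/Ubuntu pair, following the naming pattern used in the Dockerfile ARGs.
cuda_base_images() {
  local cuda="$1" ubuntu="$2"
  echo "nvidia/cuda:${cuda}-devel-ubuntu${ubuntu}"
  echo "nvidia/cuda:${cuda}-runtime-ubuntu${ubuntu}"
}

# Check both tags on the registry without pulling them (needs network access).
check_cuda_base_images() {
  local img
  for img in $(cuda_base_images "$1" "$2"); do
    if docker manifest inspect "$img" >/dev/null 2>&1; then
      echo "found:   $img"
    else
      echo "missing: $img"
    fi
  done
}
```

For example, `check_cuda_base_images 11.8.0 22.04` reports whether both base images are available before you edit the Dockerfile.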

- Prepare the llama.cpp image

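```shell
git clone https://github.com/ggerganov/llama.cpp
cd llama.cpp
# Change the CUDA version; the Ubuntu version must also match the nvidia/cuda tag found above
vim .devops/cuda.Dockerfile
# The build context is the repository root (.); the Dockerfile is passed with -f
docker build -t llama.cpp:server-cuda-11.8 --target server -f .devops/cuda.Dockerfile .
# Save as a tar archive for offline import on the target machine
docker save -o llama.cpp-server-cuda-11.8.tar llama.cpp:server-cuda-11.8
# Or push it to your own registry (set YOUR_HUB to your registry address first)
docker tag llama.cpp:server-cuda-11.8 ${YOUR_HUB}/llama.cpp:server-cuda-11.8
docker push ${YOUR_HUB}/llama.cpp:server-cuda-11.8
```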
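`CUDA_VERSION` and `UBUNTU_VERSION` are global `ARG`s declared before the first `FROM`. You can therefore override them at build time with `--build-arg` instead of editing the file in vim. This sketch assumes your copy of `.devops/cuda.Dockerfile` still declares those two ARG names. `build_llama_server` is a hypothetical helper. With `DRY_RUN=1` it only prints the command so you can review it.

```shell
# Hypothetical helper: build the llama.cpp server image for a CUDA/Ubuntu pair.
# Run from the llama.cpp repository root. DRY_RUN=1 prints the command instead of running it.
build_llama_server() {
  local cuda="$1" ubuntu="$2" tag="$3"
  # Reuse the function's positional parameters as a portable argument list.
  set -- docker build \
    --build-arg "CUDA_VERSION=${cuda}" \
    --build-arg "UBUNTU_VERSION=${ubuntu}" \
    --target server \
    -f .devops/cuda.Dockerfile \
    -t "$tag" \
    .
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}
```

For example, `build_llama_server 11.8.0 22.04 llama.cpp:server-cuda-11.8`. Choosing Ubuntu 22.04 should avoid the 20.04 workarounds noted in the Dockerfile comments, provided the matching nvidia/cuda tags exist.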

Example Dockerfile:

```dockerfile
# Example values; change them to your target versions (e.g. CUDA 11.8.0 as above).
# With Ubuntu 20.04 you will hit timezone and too-old-cmake problems; see the commented block in the build stage.
ARG UBUNTU_VERSION=20.04
# This needs to generally match the container host's environment.
ARG CUDA_VERSION=11.5.2
# Target the CUDA build image. Make sure both tags below actually exist on Docker Hub.
ARG BASE_CUDA_DEV_CONTAINER=nvidia/cuda:${CUDA_VERSION}-devel-ubuntu${UBUNTU_VERSION}
ARG BASE_CUDA_RUN_CONTAINER=nvidia/cuda:${CUDA_VERSION}-runtime-ubuntu${UBUNTU_VERSION}

FROM ${BASE_CUDA_DEV_CONTAINER} AS build

# ARGs declared before the first FROM are not visible inside a stage, so
# DEBIAN_FRONTEND is declared here to keep apt (e.g. tzdata) from prompting.
ARG DEBIAN_FRONTEND=noninteractive

# CUDA architecture to build for (defaults to all supported archs)
ARG CUDA_DOCKER_ARCH=default

# Ubuntu 20.04 only: uncomment this block and use it instead of the RUN below.
# It sets the timezone and installs cmake 3.31.5, because the packaged cmake is too old.
###########################################
#ENV TZ=Etc/UTC
#RUN apt-get update && \
#    apt-get install -y tzdata wget tar build-essential python3 python3-pip git libcurl4-openssl-dev libgomp1 && \
#    wget https://github.com/Kitware/CMake/releases/download/v3.31.5/cmake-3.31.5-linux-x86_64.tar.gz && \
#    tar -zxvf cmake-3.31.5-linux-x86_64.tar.gz && mv cmake-3.31.5-linux-x86_64 /opt/cmake && \
#    ln -s /opt/cmake/bin/cmake /usr/bin/cmake && cmake --version
###########################################

RUN apt-get update && \
    apt-get install -y build-essential cmake python3 python3-pip git libcurl4-openssl-dev libgomp1

WORKDIR /app

COPY . .

RUN if [ "${CUDA_DOCKER_ARCH}" != "default" ]; then \
        export CMAKE_ARGS="-DCMAKE_CUDA_ARCHITECTURES=${CUDA_DOCKER_ARCH}"; \
    fi && \
    cmake -B build -DGGML_NATIVE=OFF -DGGML_CUDA=ON -DLLAMA_CURL=ON ${CMAKE_ARGS} -DCMAKE_EXE_LINKER_FLAGS=-Wl,--allow-shlib-undefined . && \
    cmake --build build --config Release -j$(nproc)

RUN mkdir -p /app/lib && \
    find build -name "*.so" -exec cp {} /app/lib \;

RUN mkdir -p /app/full \
    && cp build/bin/* /app/full \
    && cp *.py /app/full \
    && cp -r gguf-py /app/full \
    && cp -r requirements /app/full \
    && cp requirements.txt /app/full \
    && cp .devops/tools.sh /app/full/tools.sh

## Base image
FROM ${BASE_CUDA_RUN_CONTAINER} AS base

RUN apt-get update \
    && apt-get install -y libgomp1 curl \
    && apt autoremove -y \
    && apt clean -y \
    && rm -rf /tmp/* /var/tmp/* \
    && find /var/cache/apt/archives /var/lib/apt/lists -not -name lock -type f -delete \
    && find /var/cache -type f -delete

COPY --from=build /app/lib/ /app

# The upstream light and full stages were removed here; only the server stage is kept.

### Server, Server only
FROM base AS server

ENV LLAMA_ARG_HOST=0.0.0.0

COPY --from=build /app/full/llama-server /app

WORKDIR /app

# 8080 is llama-server's default port; if you set LLAMA_ARG_PORT, change it here too.
HEALTHCHECK CMD [ "curl", "-f", "http://localhost:8080/health" ]

ENTRYPOINT [ "/app/llama-server" ]
```
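You can try the image on a single machine before moving to Kubernetes. The image's HEALTHCHECK polls `/health`, so the same endpoint can tell you when the model has finished loading. `wait_for_health` is a hypothetical helper. It assumes that llama-server answers `/health` with an HTTP error (such as 503) while the model is loading and with 200 once it is ready.

```shell
# Hypothetical helper: poll a llama-server /health URL until it returns a 2xx status.
# $1: URL, $2: attempts (default 30), $3: seconds between attempts (default 5).
# Returns 0 once healthy, 1 if all attempts fail.
wait_for_health() {
  local url="$1" tries="${2:-30}" delay="${3:-5}" i=1
  while [ "$i" -le "$tries" ]; do
    # -f makes curl fail on HTTP error codes, e.g. while the model is still loading
    if curl -fsS --max-time 5 "$url" >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```

For example, start the container with `docker run --gpus all -p 8080:8080 -v /models:/models -e LLAMA_ARG_MODEL=/models/qwen2.5-14b-instruct-q5_k_m.gguf llama.cpp:server-cuda-11.8`, then run `wait_for_health http://localhost:8080/health`. `--gpus` requires the NVIDIA Container Toolkit on the host.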

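Alternatively, fork the llama.cpp repository, change the Dockerfile to your target versions, and build the image with a GitHub Actions workflow. For reference, see this repository: https://github.com/Williamyzd/appbuilder.git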

Ready-to-use tags: https://github.com/Williamyzd/appbuilder/pkgs/container/appbuilder%2Fllama.cpp

Prepare the model

Write the docker-compose file

Write the k8s YAML

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: qwen2-5-14b-gpu
  namespace: llms
  labels:
    app: qwen2-5-14b-gpu
spec:
  replicas: 1 # number of replicas
  selector:
    matchLabels:
      app: qwen2-5-14b-gpu
  template:
    metadata:
      labels:
        app: qwen2-5-14b-gpu
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/cluster-role
                    operator: In
                    values:
                      - slave
                      - master-compute
                  # - key: kubernetes.io/gpu-type-name
                  #   operator: In
                  #   values:
                  #     - Tesla-P4
      containers:
        - name: qwen2-5-14b-gpu-gpu
          image: 10.233.0.100:5000/llama_cpp_gpu_12_2:cuda12.2_img-csbhtgdrp9ts131y # image to use
          imagePullPolicy: IfNotPresent # image pull policy
          #command: ["nvidia-smi","&&","tail", "-f","/dev/null"]
          env:
            - name: LLAMA_ARG_MODEL
              value: "/models/qwen2.5-14b-instruct-q5_k_m.gguf"
            - name: LLAMA_ARG_PORT
              value: "8000"
            - name: LLAMA_ARG_UBATCH
              value: "4096"
            - name: LLAMA_ARG_HOST
              value: "0.0.0.0"
            - name: LLAMA_ARG_N_PARALLEL
              value: "2"
            - name: LLAMA_ARG_N_PREDICT
              value: "4096"
            - name: LLAMA_ARG_CTX_SIZE
              value: "12000"
            - name: LLAMA_ARG_N_GPU_LAYERS
              value: "10"
            # # vGPU resource limits
            # - name: USE_GPU
            #   value: "1"
            # - name: GPU_NUM
            #   value: "100"
          resources:
            requests:
              memory: "1Gi" # requested memory
              cpu: "1" # requested CPU in cores ("1" = 1000m)
            limits:
              memory: "64Gi" # memory limit
              cpu: "8"
              # If the cluster uses the NVIDIA device plugin, request a GPU explicitly:
              # nvidia.com/gpu: 1
          securityContext:
            privileged: false
            runAsGroup: 0
            runAsUser: 0
          ports:
            - containerPort: 8000 # port exposed by the container
          volumeMounts:
            - mountPath: /dev/shm
              name: cache-volume
            - mountPath: /models
              # subPath: v-wa9c73y3ge0wrvmm/org/william/qwen
              name: llm-models
      volumes:
        - name: cache-volume
          emptyDir:
            medium: Memory
            sizeLimit: "4Gi"
        # - name: gluster-llms
        #   persistentVolumeClaim:
        #     claimName: gluster-llms
        ### Fill in according to your environment
        - hostPath:
            path: /models
            type: Directory
          name: llm-models
---
apiVersion: v1
kind: Service
metadata:
  name: qwen2-5-14b-gpu
  namespace: llms
spec:
  selector:
    app: qwen2-5-14b-gpu # must match the Pod labels in the Deployment
  ports:
    - protocol: TCP
      port: 8000 # port the Service listens on
      targetPort: 8000 # port the application listens on inside the Pod
      # port opened on every node; it must lie in the cluster's NodePort range
      # (default 30000-32767), so 8512 needs a widened --service-node-port-range
      nodePort: 8512
  type: NodePort # Service type NodePort
```
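Two values in the manifest are worth checking before applying it. First, llama-server divides the total context across its parallel slots. With `LLAMA_ARG_CTX_SIZE=12000` and `LLAMA_ARG_N_PARALLEL=2`, each request gets about 6000 tokens, and that budget must hold both the prompt and up to `LLAMA_ARG_N_PREDICT` generated tokens. Second, Kubernetes only accepts a NodePort inside the apiserver's `--service-node-port-range`, which defaults to 30000-32767. The helpers below are hypothetical. The even split is an assumption that may not hold in every llama-server build, so confirm the per-slot context in the server's startup log.

```shell
# Hypothetical helper: tokens per slot, assuming CTX_SIZE is split evenly across N_PARALLEL slots.
slot_ctx() {
  echo $(( $1 / $2 ))
}

# Hypothetical helper: tokens left for the prompt in one slot when a reply uses the
# full N_PREDICT budget; a negative result means the settings do not fit.
# $1: CTX_SIZE, $2: N_PARALLEL, $3: N_PREDICT
prompt_budget() {
  echo $(( $1 / $2 - $3 ))
}

# Hypothetical helper: succeed if a NodePort lies in Kubernetes' default range.
in_default_nodeport_range() {
  [ "$1" -ge 30000 ] && [ "$1" -le 32767 ]
}
```

For the manifest above, these give 6000 tokens per slot, leaving 1904 tokens for the prompt when a reply runs to the full 4096. `in_default_nodeport_range 8512` fails, so either choose a port in the default range or confirm that your cluster's range was widened. Then apply the manifest with `kubectl apply -f <file>.yaml`.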

- The model is mounted via hostPath here; choose whatever storage fits your environment better.

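- Adjust the hostPath location to where the models are stored. A hostPath volume is local to each node, so the model file must exist at that path on every node the Pod can be scheduled to.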
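A hostPath volume is node-local, so a quick check on each candidate node can catch a missing or truncated model file before the Pod starts. GGUF files begin with the 4-byte magic `GGUF`. `is_gguf` is a hypothetical helper that tests only that header and does not validate the rest of the file.

```shell
# Hypothetical helper: succeed if the path is a regular file starting with the GGUF magic.
is_gguf() {
  [ -f "$1" ] && [ "$(head -c 4 "$1")" = "GGUF" ]
}
```

For example, on each node: `is_gguf /models/qwen2.5-14b-instruct-q5_k_m.gguf || echo "model missing or not GGUF"`.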