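1. Prepare the base image

In most cases you can automate the build as described in [GitHub Action]. Building vLLM, however, needs a lot of disk space and memory, and the free GitHub Actions runner (4 cores, 16 GB RAM, 14 GB SSD) is too small, so the image build fails there. Use your own server instead.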

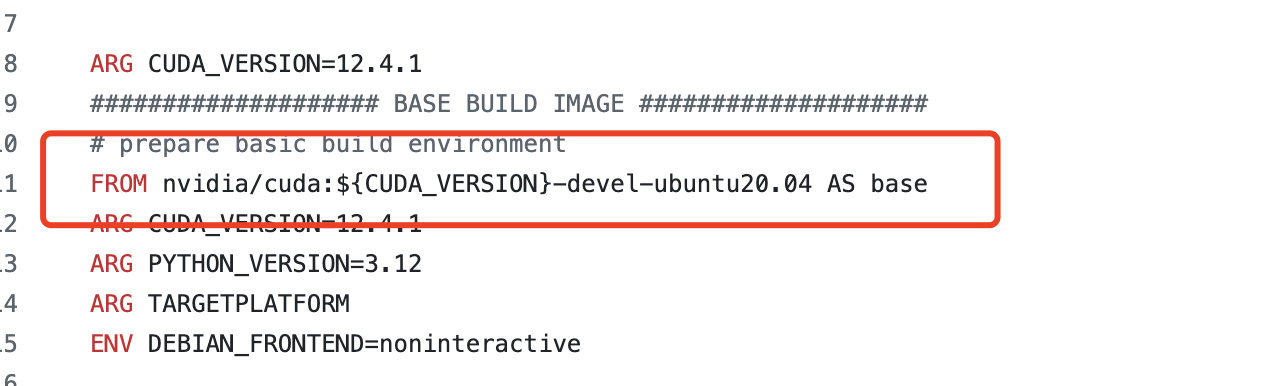

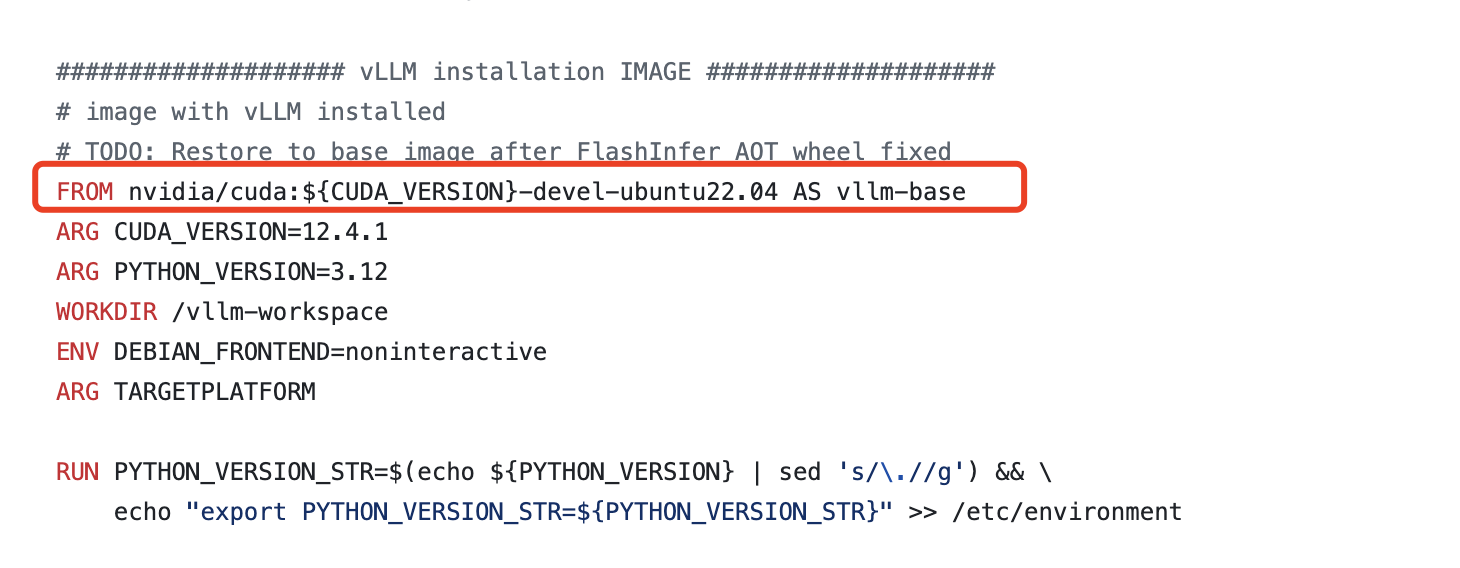

See https://github.com/vllm-project/vllm/blob/main/Dockerfile

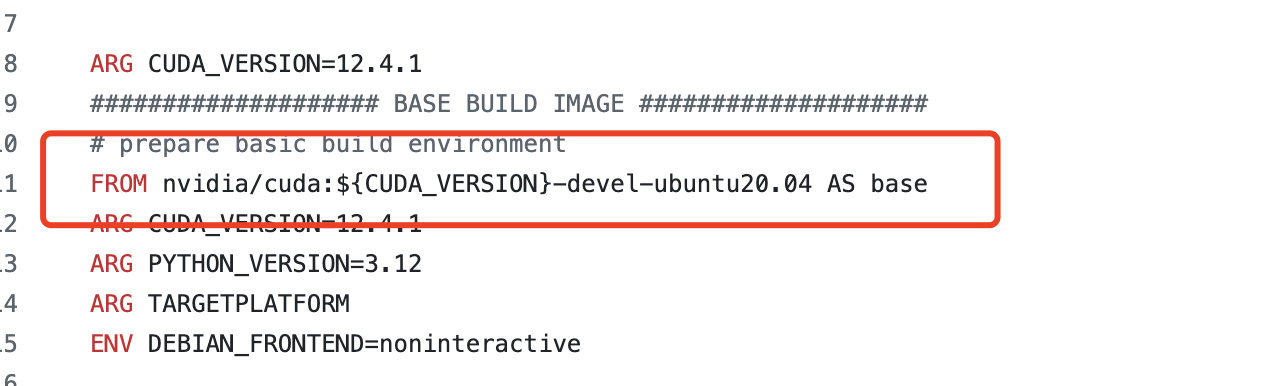

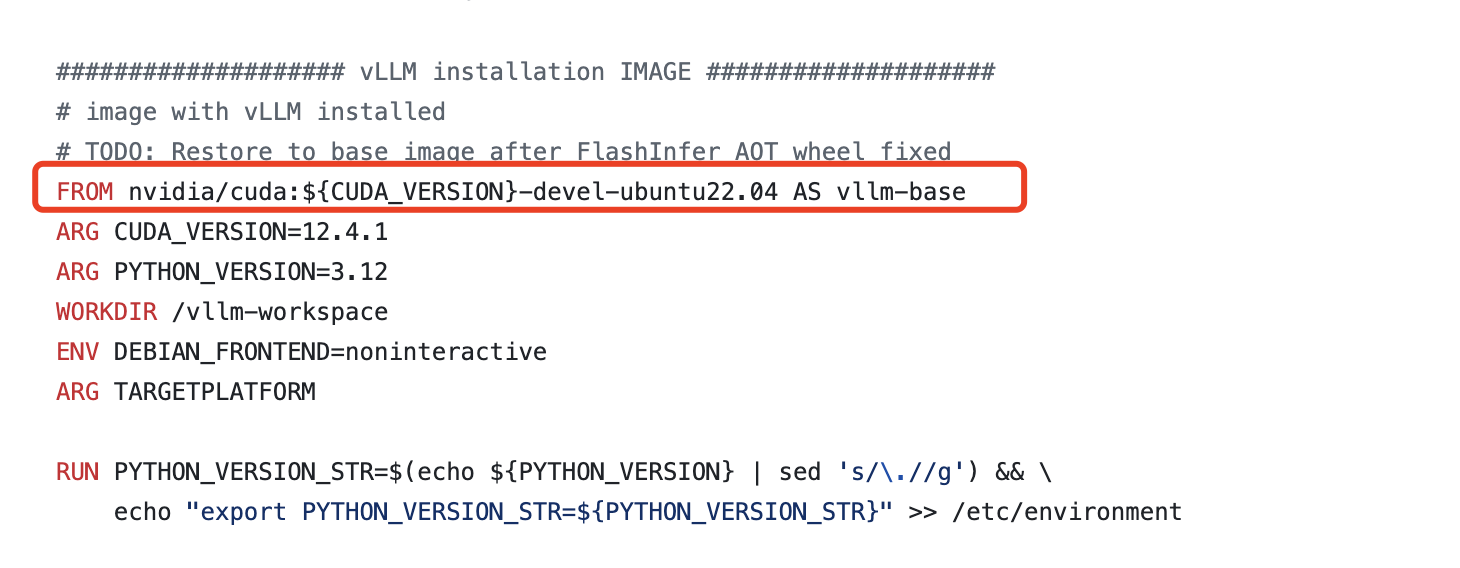

From this, the required base images can be worked out. For example, if the target CUDA version is 12.1.1, the base images needed are:

nvidia/cuda:12.1.1-devel-ubuntu20.04

nvidia/cuda:12.1.1-devel-ubuntu22.04
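The naming pattern of these tags can be sketched in a few lines of Python. This is only an illustration: the helper name `cuda_base_image` and its `flavor` parameter are hypothetical, and whether a given combination is actually published still has to be checked against the registry.

```python
def cuda_base_image(cuda_version: str, ubuntu_version: str, flavor: str = "devel") -> str:
    """Compose an nvidia/cuda image reference from its parts.

    Hypothetical helper: it only formats the name, it does not check
    that the tag exists in the registry.
    """
    return f"nvidia/cuda:{cuda_version}-{flavor}-ubuntu{ubuntu_version}"


# The two candidates listed above for CUDA 12.1.1
candidates = [cuda_base_image("12.1.1", ubuntu) for ubuntu in ("20.04", "22.04")]
for name in candidates:
    print(name)
```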

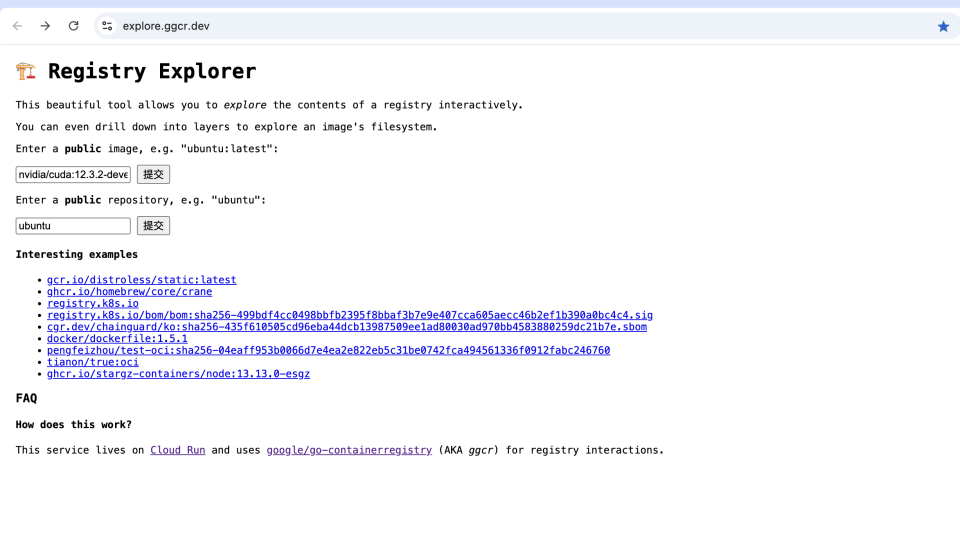

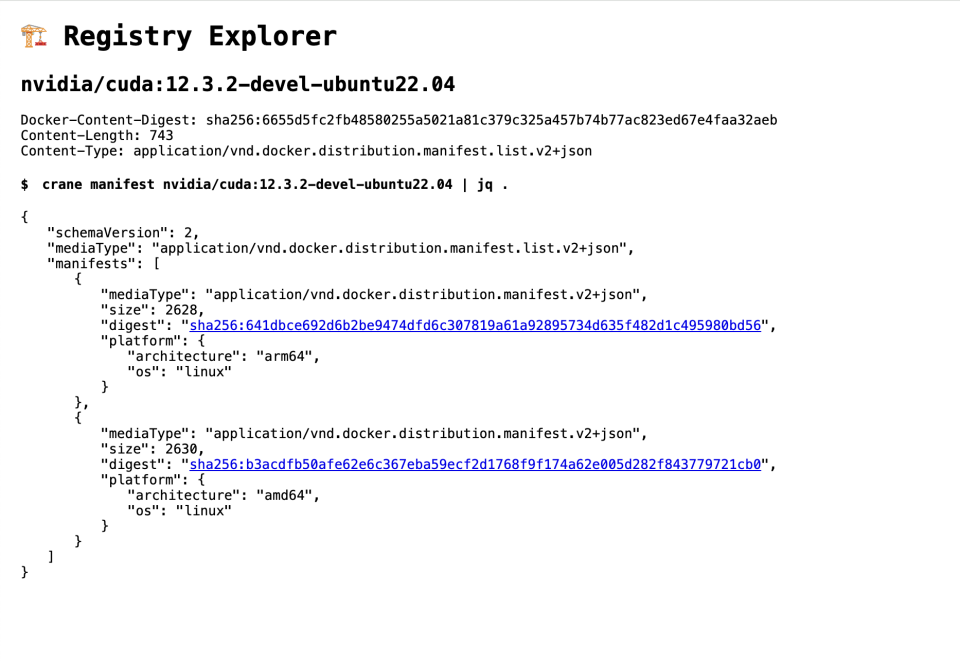

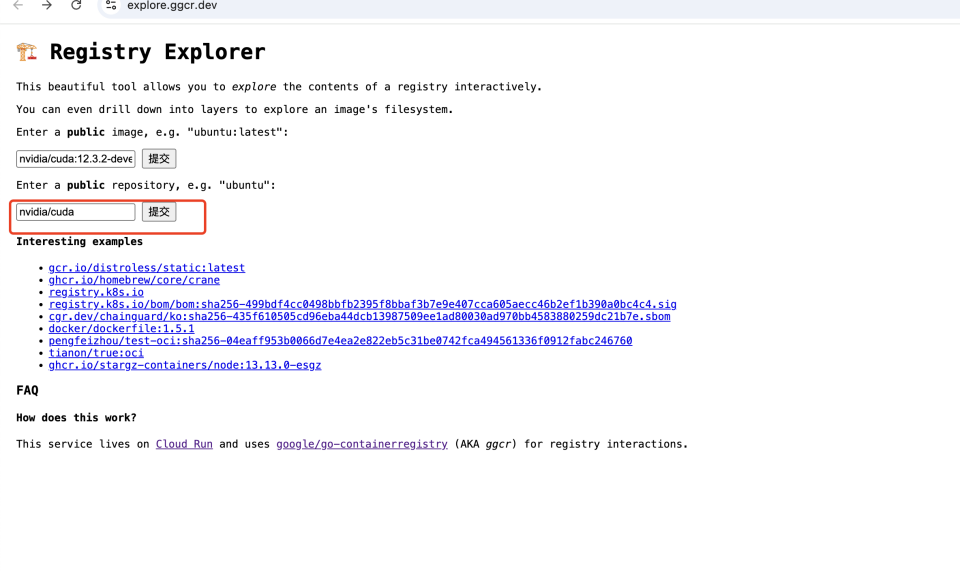

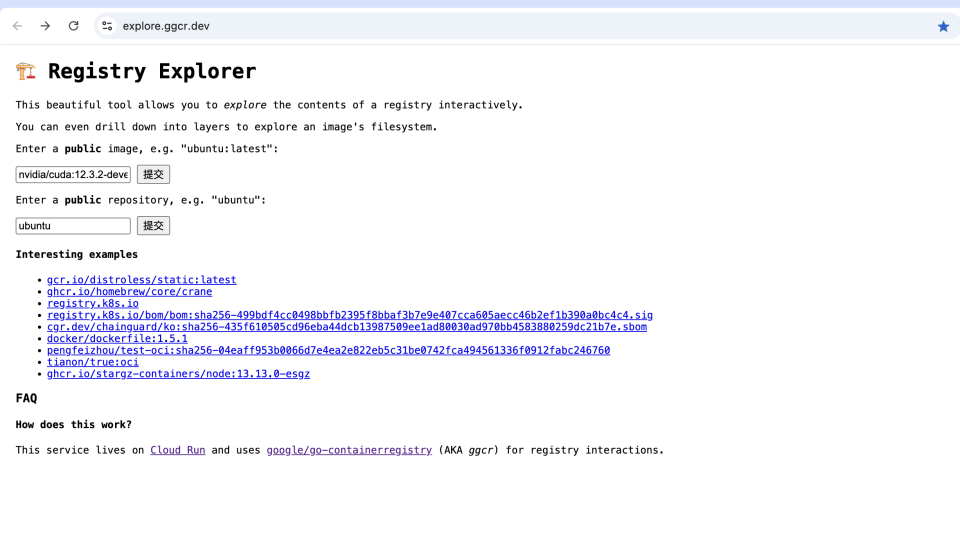

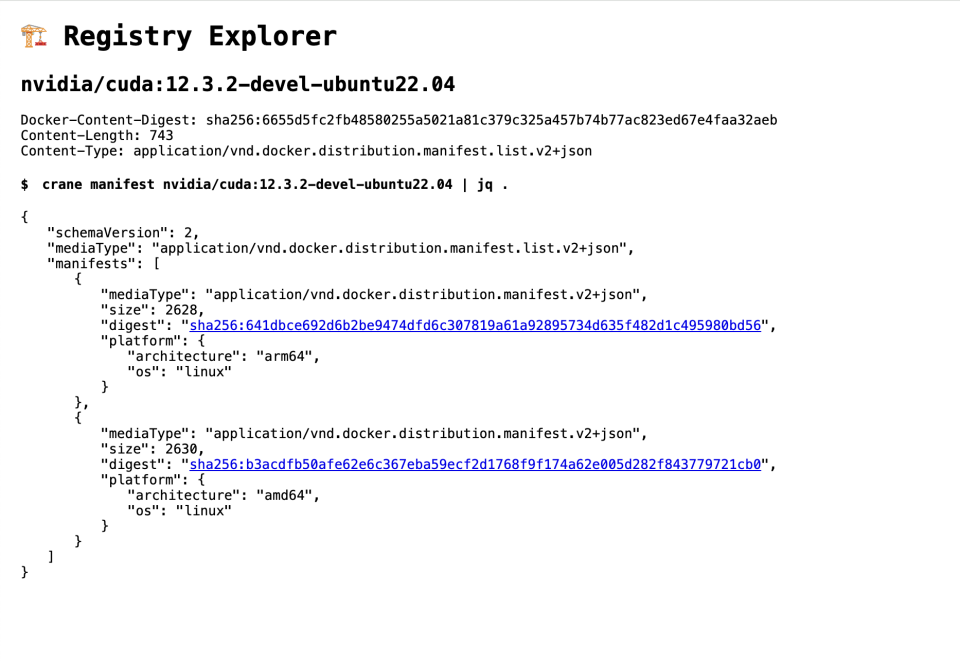

https://explore.ggcr.dev/

The image combinations above do exist. If the one you need does not, you can search this site for a nearby combination.

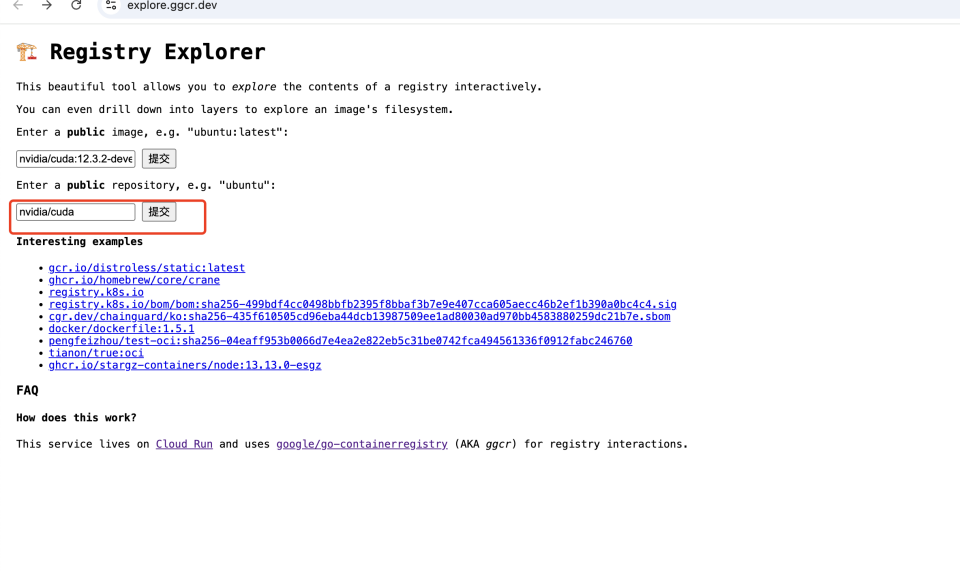

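For example, the tag list for nvidia/cuda: https://explore.ggcr.dev/?repo=nvidia%2Fcuda

If you cannot find the target base image and no nearby image meets your requirements either, consider building your own base image; see the nvidia/cuda repository: https://gitlab.com/nvidia/container-images/cuda . That is not covered here.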
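Picking a "nearby" tag from such a list can be sketched as follows. This is a rough illustration under assumptions: the helper names are hypothetical, the `available` list is a hand-written sample rather than real registry output, and "nearby" is taken to mean the same flavor and Ubuntu release with the closest CUDA version.

```python
import re

# Matches plain tags such as 12.1.1-devel-ubuntu22.04 (assumed tag layout)
TAG_RE = re.compile(r"^(\d+)\.(\d+)\.(\d+)-(\w+)-ubuntu(\d+\.\d+)$")


def parse_tag(tag):
    """Split a tag into ((major, minor, patch), flavor, ubuntu), or return None."""
    match = TAG_RE.match(tag)
    if match is None:
        return None
    major, minor, patch, flavor, ubuntu = match.groups()
    return (int(major), int(minor), int(patch)), flavor, ubuntu


def nearby_tags(target, available):
    """List tags with the target's flavor and Ubuntu release, nearest CUDA version first."""
    parsed_target = parse_tag(target)
    if parsed_target is None:
        raise ValueError(f"unrecognized tag: {target}")
    target_version, target_flavor, target_ubuntu = parsed_target
    matches = []
    for tag in available:
        parsed = parse_tag(tag)
        if parsed is None:
            continue  # skip tags like "latest" that do not follow the pattern
        version, flavor, ubuntu = parsed
        if flavor == target_flavor and ubuntu == target_ubuntu:
            distance = tuple(abs(a - b) for a, b in zip(version, target_version))
            matches.append((distance, tag))
    return [tag for _, tag in sorted(matches)]


# Hand-written sample list, for illustration only
available = [
    "12.1.0-devel-ubuntu22.04",
    "12.2.2-devel-ubuntu22.04",
    "12.1.1-runtime-ubuntu22.04",
    "11.8.0-devel-ubuntu22.04",
    "12.1.0-devel-ubuntu20.04",
    "latest",
]
print(nearby_tags("12.1.1-devel-ubuntu22.04", available))
```

Comparing the version distance as a (major, minor, patch) tuple prefers a different patch release over a different minor release, which is usually the safer substitution.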

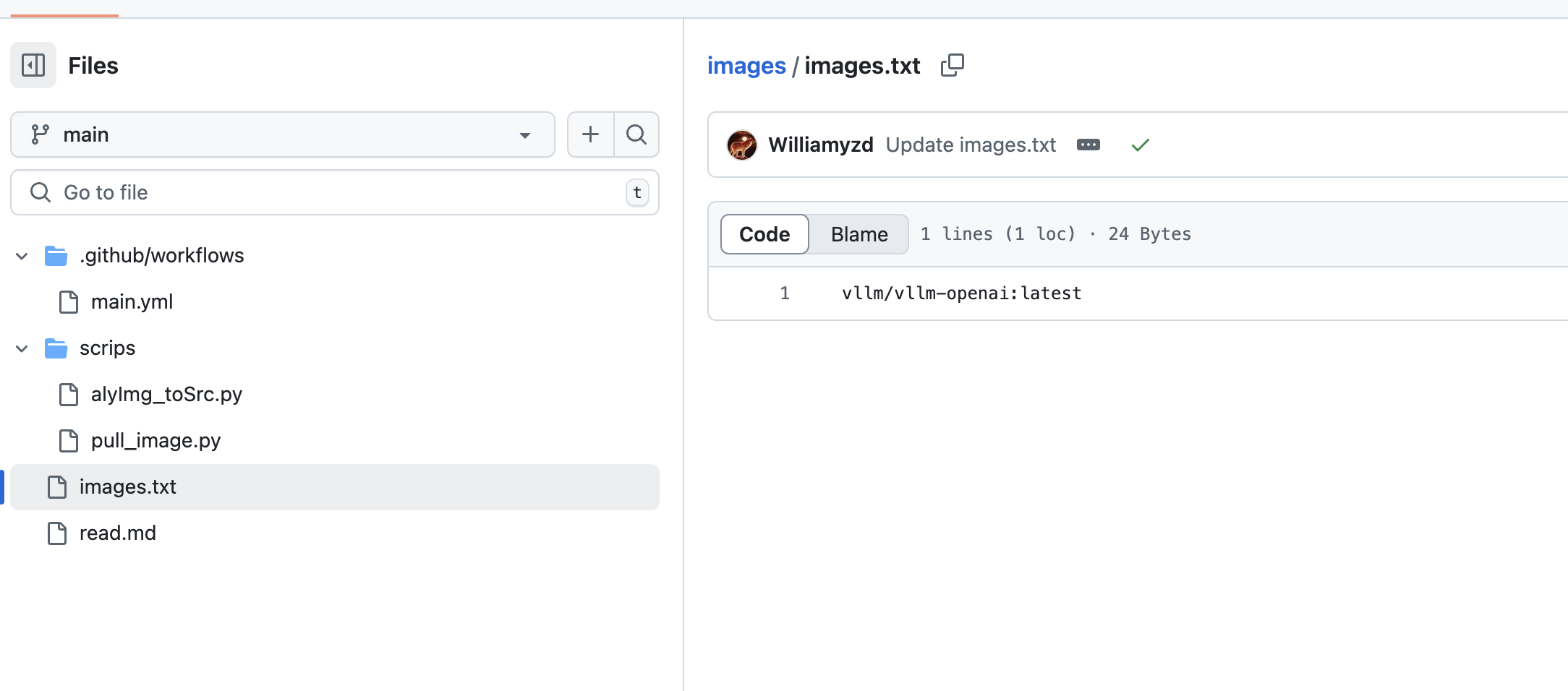

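2. Sync the base image to a registry in mainland China (optional; skip this step if you have a server with unrestricted internet access or can reach the official registries directly)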

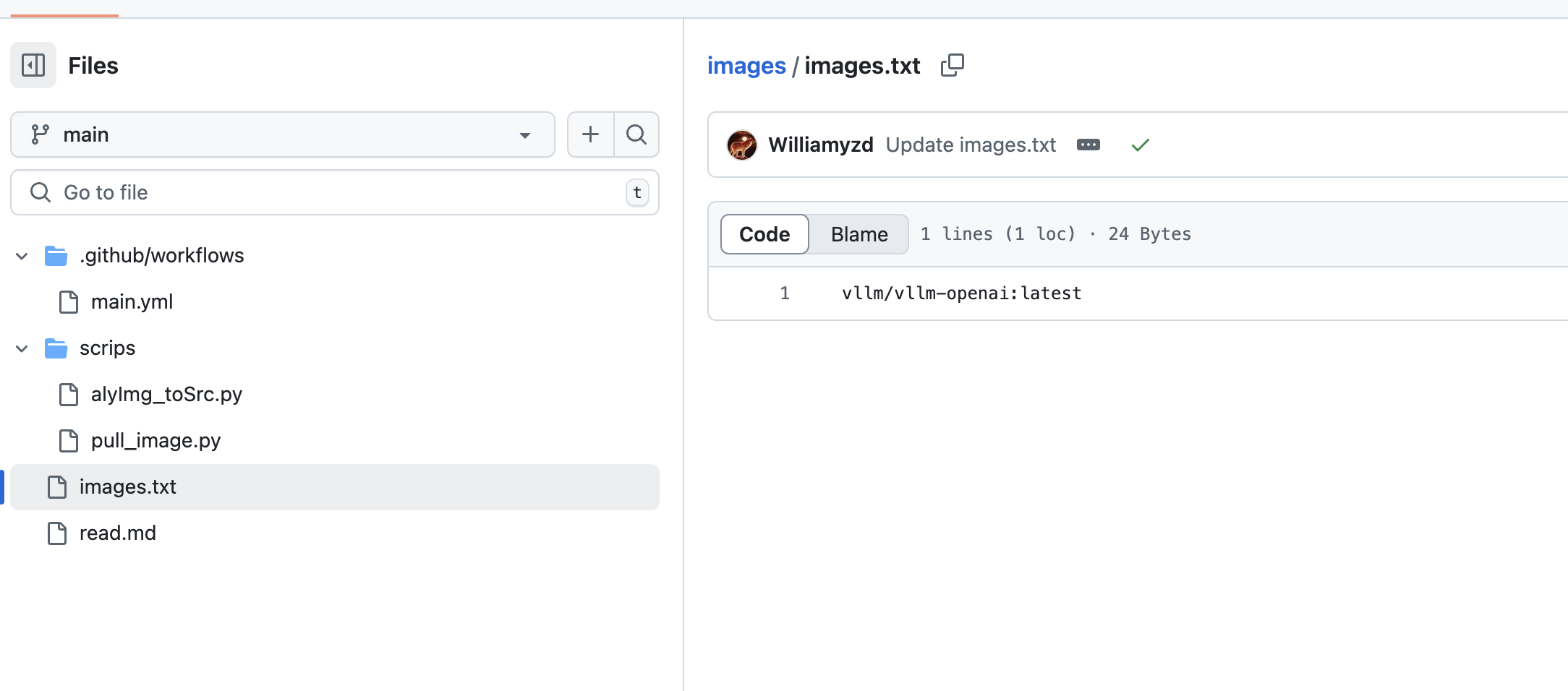

Sync automatically with a GitHub workflow.

The complete workflow YAML:


    # Workflow name
    name: Sync-Images-to-Aliyun-CR
    # Display name shown for each workflow run
    run-name: ${{ github.actor }} is Sync Images to Aliyun CR.
    # What triggers the workflow
    on:
      push:
        branches: [ "main" ]
      # Allows you to run this workflow manually from the Actions tab
      workflow_dispatch:
    # Workflow jobs (each usually contains one or more steps)
    jobs:
      syncimages:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout Repos
            uses: actions/checkout@v4
          - name: Login to Aliyun CR
            uses: docker/login-action@v3
            with:
              registry: ${{ vars.ALY_REGISTRY }}
              username: ${{ secrets.ALY_UNAME }}
              password: ${{ secrets.ALY_PASSWD }}
              logout: false
          - uses: actions/setup-python@v5
            with:
              python-version: '3.10'
          - run: python scrips/pull_image.py

The corresponding image-handling script:


    # -*- coding: utf-8 -*-
    import datetime
    import json
    import re  # only needed by the commented-out parsing below
    import subprocess

    rs = []
    # Destination registry and namespace
    ds_reg = 'registry.cn-hangzhou.aliyuncs.com/reg_pub/'

    # images.txt lists one source image per line
    with open('images.txt', 'r') as f:
        lines = f.read().split('\n')

    for img in lines:
        # ims = re.split(r'\s+', img)
        # p = None
        # if len(ims) > 1 and len(ims[1]) > 0:
        #     p = ims[1]
        if len(img) > 0:
            # Flatten the repository path: nvidia/cuda:tag -> nvidia_cuda:tag
            ims = img.replace('/', '_')
            ds = ds_reg + ims
            # First try a registry-to-registry copy with skopeo
            cmds = 'skopeo copy --all docker://' + img + ' docker://' + ds
            code, rss = subprocess.getstatusoutput(cmds)
            print('*' * 20 + '\n' + "copy {} to {}".format(img, ds) + '\n' + "rs:\n code:{}\n rs:{}".format(code, rss))
            if code != 0:
                # Fall back to docker pull / tag / push, then remove the local copies
                cmds = 'docker pull ' + img + ' && docker tag ' + img + ' ' + ds + ' && docker push ' + ds + ' && docker rmi {} {} '.format(img, ds)
                code, rss = subprocess.getstatusoutput(cmds)
                print('skopeo copy error, try docker pull and push:' + "\n" + cmds + '\n' + "rs:\n code:{}\n rs:{}".format(code, rss))
            r = {
                'src': img,
                'ds': ds,
                'code': code,
                'rs': rss
            }
            rs.append(r)

    # Write the results of all copies to a timestamped JSON file
    ctime = datetime.datetime.now().strftime('%Y-%m-%d-%H:%M:%S')
    with open('result-{}.json'.format(ctime), encoding='utf-8', mode='w') as f:
        json.dump(rs, f)
    # Print every result (the original printed only the last one and failed on an empty list)
    print(rs)
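To make the naming rule in the script easier to check before running a real sync, here is a small sketch that only computes the destination names. The helper names `read_image_list` and `destination_for` are hypothetical, and the sample list stands in for an `images.txt` with one source image per line.

```python
DEST_REGISTRY = "registry.cn-hangzhou.aliyuncs.com/reg_pub/"


def read_image_list(text):
    """Return the non-empty lines of an images.txt-style string, stripped of whitespace."""
    return [line.strip() for line in text.split("\n") if line.strip()]


def destination_for(image, registry=DEST_REGISTRY):
    """Map a source image to its destination, replacing '/' with '_' as the script does."""
    return registry + image.replace("/", "_")


# Illustrative stand-in for the contents of images.txt
sample = "nvidia/cuda:12.1.1-devel-ubuntu22.04\n\nvllm/vllm-openai:latest\n"
for image in read_image_list(sample):
    print(image, "->", destination_for(image))
```

Flattening the path this way keeps every mirrored image in a single namespace (`reg_pub`), at the cost of repository names such as `nvidia_cuda` that differ from the upstream ones.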

3. Prepare the Dockerfile

The full Dockerfile:


    # Check that this OS version + CUDA version combination exists: https://explore.ggcr.dev/?repo=nvidia%2Fcuda
    FROM nvidia/cuda:12.1.1-devel-ubuntu22.04 AS vllm-base

    # CUDA version: vLLM currently supports only CUDA 12.4, 12.1 and 11.8; for other versions you have to compile it yourself
    ARG CUDA_VERSION=121

    # vLLM version
    ARG VLLM_VERSION=0.8.1

    # Prevent interactive prompts from hanging the build
    ENV DEBIAN_FRONTEND=noninteractive

    # Update the package index and install build dependencies
    RUN apt update && \
        apt install -y build-essential zlib1g-dev libncurses5-dev libgdbm-dev libnss3-dev libssl-dev libsqlite3-dev libreadline-dev libffi-dev libbz2-dev liblzma-dev tk-dev wget

    # Build and install Python; change the version here if you need a different one
    # (ln -sf overwrites a python3/pip link that may already exist)
    RUN wget https://www.python.org/ftp/python/3.12.9/Python-3.12.9.tgz && \
        tar -zxvf Python-3.12.9.tgz && \
        cd Python-3.12.9 && \
        ./configure --enable-optimizations --prefix=/usr/local/python3.12 && make -j$(nproc) && \
        make altinstall && \
        ln -sf /usr/local/python3.12/bin/python3.12 /usr/bin/python3 && \
        ln -sf /usr/local/python3.12/bin/pip3.12 /usr/bin/pip3 && \
        ln -sf /usr/local/python3.12/bin/pip3.12 /usr/bin/pip && \
        cd .. && \
        rm -rf Python-3.12.9.tgz Python-3.12.9

    # Set PATH
    ENV PATH="/usr/bin:/usr/local/python3.12/bin:$PATH"

    # Install vLLM; check the version you need, and if no suitable wheel exists, consider compiling from source.
    # Download, install and delete the wheel in one RUN so the wheel file does not stay in an image layer.
    RUN wget https://github.com/vllm-project/vllm/releases/download/v${VLLM_VERSION}/vllm-${VLLM_VERSION}+cu${CUDA_VERSION}-cp38-abi3-manylinux1_x86_64.whl && \
        pip install vllm-${VLLM_VERSION}+cu${CUDA_VERSION}-cp38-abi3-manylinux1_x86_64.whl --extra-index-url https://download.pytorch.org/whl/cu${CUDA_VERSION} && \
        rm -f vllm-${VLLM_VERSION}+cu${CUDA_VERSION}-cp38-abi3-manylinux1_x86_64.whl

    ENTRYPOINT ["python3", "-m", "vllm.entrypoints.openai.api_server"]
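Before starting a long build it can help to see exactly which wheel URL the two build arguments expand to, so you can confirm in a browser that the file exists. The sketch below mirrors the string pattern used in the Dockerfile; the function names are hypothetical and nothing is downloaded.

```python
def vllm_wheel_name(vllm_version: str, cuda_version: str) -> str:
    """File name pattern used in the Dockerfile above (cuda_version like '121')."""
    return f"vllm-{vllm_version}+cu{cuda_version}-cp38-abi3-manylinux1_x86_64.whl"


def vllm_wheel_url(vllm_version: str, cuda_version: str) -> str:
    """GitHub release download URL for that wheel, following the same pattern."""
    name = vllm_wheel_name(vllm_version, cuda_version)
    return f"https://github.com/vllm-project/vllm/releases/download/v{vllm_version}/{name}"


def pytorch_index_url(cuda_version: str) -> str:
    """Extra index passed to pip for the matching CUDA build of PyTorch."""
    return f"https://download.pytorch.org/whl/cu{cuda_version}"


print(vllm_wheel_url("0.8.1", "121"))
print(pytorch_index_url("121"))
```

If the printed URL returns 404, no prebuilt wheel was published for that version pair, and the source-build route mentioned in the Dockerfile comment applies.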

4. Build the image and push it

The core commands are:


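    # On a powerful server you can append --build-arg max_jobs=8 --build-arg nvcc_threads=2, or tune the values
    # to your hardware; on a modest machine this is not recommended. These arguments only take effect if the
    # Dockerfile declares matching ARGs, as the official vLLM Dockerfile does.
    docker build --tag vllm/vllm-openai .
    docker tag vllm/vllm-openai registry.cn-hangzhou.aliyuncs.com/reg_pub/vllm_vllm-openai:cuda-12.1.1-20250320
    docker push registry.cn-hangzhou.aliyuncs.com/reg_pub/vllm_vllm-openai:cuda-12.1.1-20250320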
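The tag used above appears to combine the CUDA version with the build date. Assuming that convention, a small helper can generate it consistently for later builds; the function name `release_ref` is hypothetical, and the default repository is the one from the commands above.

```python
import datetime


def release_ref(cuda_version, build_date,
                repo="registry.cn-hangzhou.aliyuncs.com/reg_pub/vllm_vllm-openai"):
    """Build an image reference of the form <repo>:cuda-<version>-<YYYYMMDD>."""
    return f"{repo}:cuda-{cuda_version}-{build_date:%Y%m%d}"


# Reproduces the reference used in the commands above
print(release_ref("12.1.1", datetime.date(2025, 3, 20)))
```

Passing `datetime.date.today()` as `build_date` yields the reference for a build made today.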